6 Creative Examples of How Generative AI Is Transforming User Experiences

And the apps that are leading the way

I was recently asked by a friend if I could expand on my thoughts around “AI first UX” becoming big this year, one of the 3 major trends I talked about in my Applying AI Boldly presentation. They wanted to know if I had any specific examples that I could point to of products or features that I felt were doing this well.

I spend a lot of time thinking about this in the product work I’m doing at Google, and there are a few examples of other products and teams that have launched things that I think are worth talking about.

Some notable examples I’ve come across are:

v0 by Vercel: go from text and/or image to a fully coded user interface

Tome: enter any prompt and make a compelling presentation using AI in minutes

Arc Browser: an AI-powered web browser

MusicFX & ImageFX: generate music and images with a simple prompt

Custom GPTs: a no-code feature of ChatGPT that lets users customize the chatbot

6 AI UX Patterns To Note

These apps span different use cases and modalities, but the one thing they all have in common is the introduction of new, AI-first user interactions and patterns:

Generative UI

Point to prompt

Inline assistance

Smart chips

Pinch to summarize

Multi-agent chat

1. Generative UI

There are a few products hitting the market these days that enable users to go straight from prompt to UI. Two products that do this especially well right now are v0 by Vercel and Tome.

With v0, a user can simply enter a prompt and watch as it is transformed into UI, along with copy-and-paste-friendly React code for the generation. In this example, I had v0 generate a resume for myself (I’ve been in the process of hiring and talking to many friends looking for jobs, so resumes are on my mind). Going from prompt to a code-ready template was super simple. Just start with a prompt, and from there 3 examples are generated that you can edit and refine. You can continue to tweak the design, and each new iteration is a new version.

Tome allows you to go straight from a prompt to a well-designed and thoughtful artifact, such as an image, presentation, or document. In the following example I created a presentation for an upcoming talk I’m going to be giving titled “the art of the possible”, which will focus on new and innovative ways that a company could apply generative AI in the retail industry.

In both of these examples I was able to start with an idea, and have Generative AI do the heavy lifting of producing an initial version of something for me. But it wasn’t the final product. There was still plenty of ideation, iteration, and refinement before I was happy with it.

2. Point to Prompt

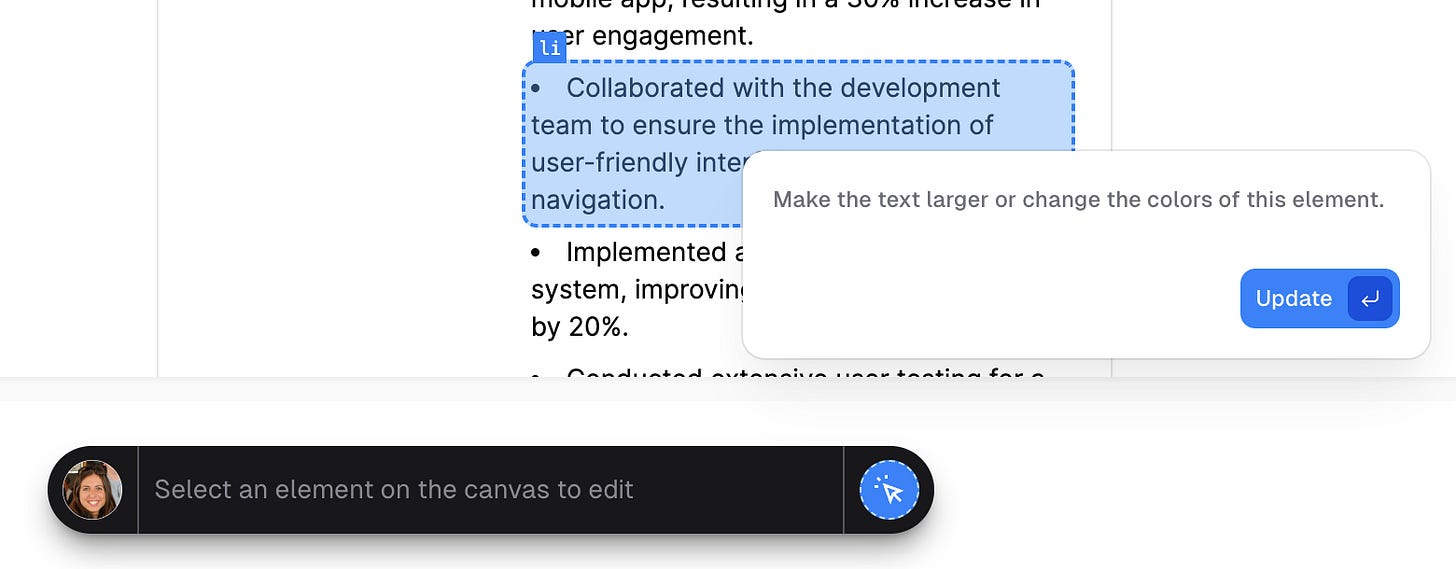

This brings me to another interesting pattern which I’ll call “point to prompt”. After my initial creation in v0, there’s a feature that allows me to simply point at the area on my generated UI artifact and edit it:

3. Inline Assistance

In general, more and more AI-powered features will be embedded throughout the workflow. Take Tome, for example: you can select text and apply AI-powered edits to the content, or you can use AI to generate a brand new image.

4. Smart Chips

Both MusicFX and ImageFX took a novel approach to prompt iteration by automatically finding the right words and phrases in a user prompt and turning them into smart chips alongside the generated output. Each chip lets a user try out different options from a dropdown list, making it easy to tweak and edit the initial prompt!
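To make the smart chip idea a bit more concrete, here is a minimal sketch in Python. It is not how MusicFX or ImageFX actually work (I’d assume a model picks the words and the alternatives there); the `ALTERNATIVES` table, the `Chip` class, and both function names are made up for illustration. It just shows the two halves of the pattern: finding the swappable words in a prompt, and rebuilding the prompt when a user picks a different option from a chip’s dropdown.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical table of swappable words and their alternatives.
# A real product would probably ask a model for these; this is a stand-in.
ALTERNATIVES = {
    "jazz": ["funk", "ambient", "lo-fi"],
    "upbeat": ["mellow", "dramatic"],
    "piano": ["guitar", "synth"],
}


@dataclass
class Chip:
    start: int          # index of the chip's first character in the prompt
    end: int            # index one past the chip's last character
    text: str           # the word currently shown in the chip
    options: List[str]  # alternatives offered in the dropdown


def find_chips(prompt: str) -> List[Chip]:
    """Scan the prompt and return a chip for every word found in the table."""
    chips = []
    for match in re.finditer(r"[A-Za-z][A-Za-z-]*", prompt):
        options = ALTERNATIVES.get(match.group(0).lower())
        if options:
            chips.append(Chip(match.start(), match.end(), match.group(0), options))
    return chips


def swap_chip(prompt: str, chip: Chip, choice: str) -> str:
    """Return a new prompt with the chip's word replaced by the chosen option."""
    if choice not in chip.options:
        raise ValueError(f"{choice!r} is not an option for {chip.text!r}")
    return prompt[:chip.start] + choice + prompt[chip.end:]


prompt = "An upbeat jazz tune with piano"
chips = find_chips(prompt)
new_prompt = swap_chip(prompt, chips[1], "funk")
```

Since a swap can shift the character positions of the chips that follow it, the simplest approach in this sketch is to call `find_chips` again on the new prompt after every change.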

5. Pinch to summarize

Another fun new pattern is what Arc Browser introduced with their pinch to summarize feature. They allow users to select any web page in the Arc Search app and pinch it (a gesture previously used for zooming out), which then summarizes the page.

6. Multi-agent chat

While I hesitate to refer to custom GPTs as agents (yet), I think that’s generally where things are going, so I’ll lean into that term. Right now, when you have a ChatGPT Plus subscription you can access custom GPTs. This means you can talk to ChatGPT or any of the custom GPTs, directly from the prompt box, at any time.

I think this type of multi-agent interaction and pattern is one that we will see more and more, and an area where I expect more improvements. While the current feature is already an improvement, now that I can simply call a custom GPT using “@”, it still limits me to interacting with just one.
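For anyone curious what the “@” pattern boils down to, here is a rough sketch in Python. This is not how ChatGPT implements it; the agent names, the `AGENTS` table, and the `route` and `send` functions are all hypothetical, and the “agents” just echo the message instead of calling a model. The idea is simply that a leading mention picks who answers, and everything else falls back to the default assistant.

```python
import re
from typing import Callable, Dict, Tuple

Agent = Callable[[str], str]

# Hypothetical agents. Real ones would call a model; these only echo.
AGENTS: Dict[str, Agent] = {
    "default": lambda msg: f"[default] {msg}",
    "resume-coach": lambda msg: f"[resume-coach] {msg}",
    "slide-writer": lambda msg: f"[slide-writer] {msg}",
}

# A message that starts with "@name" followed by the text for that agent.
MENTION = re.compile(r"^@([\w-]+)\s+(.*)$", re.DOTALL)


def route(message: str) -> Tuple[str, str]:
    """Return (agent name, text to send), using the default agent as fallback."""
    text = message.strip()
    match = MENTION.match(text)
    if match and match.group(1) in AGENTS:
        return match.group(1), match.group(2)
    return "default", text


def send(message: str) -> str:
    """Route the message and return the chosen agent's reply."""
    name, text = route(message)
    return AGENTS[name](text)


reply = send("@slide-writer outline my talk")
```

Note that this sketch has the same limitation as the feature it imitates: only one mention at the start of the message is recognized, so each message goes to exactly one agent.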

What’s Next?

While these are some interesting patterns that highlight what an AI-first user experience could look like, this is still very much just the beginning, and I’m excited to see where things go!