How I Think About Agent Design as a PM: 5 Product Frameworks

Key lenses: capabilities, environments, user experience, and the evolving nature of "learning."

The world of AI agents is evolving at a breakneck pace. As product builders, we're not just launching features anymore; we're architecting new kinds of interactions, novel AI-driven functionalities, and often, entirely new product paradigms. To navigate this exciting (and sometimes bewildering) landscape, I find myself returning to a few core product frameworks that help bring clarity to the complexity.

My hope is that by sharing these, you'll find them useful in your own thinking, whether you're actively building agents or just trying to understand where this technology is headed. These aren't rigid rules, but rather helpful lenses through which to examine the challenges and opportunities before us.

Framework 1: The Core Triad - Agent, Environment, Ecosystem

When I first start conceptualizing AI agents, I find it helpful to break the space down into three fundamental components: the agent itself, the environment in which it operates, and the broader ecosystem with which it interacts.

First, let's consider the Agent itself – the core intelligence. What are its fundamental capabilities? This involves understanding its skills and tools, which form its essential toolkit. Beyond foundational reasoning and planning, we need to define what the agent can actually do. Can it navigate the web like Project Mariner? Can it execute complex actions like running code or generating images? This is where its ability to call external APIs (for instance, to book travel or complete e-commerce transactions) becomes critical. An emerging standard, the Model Context Protocol (MCP), is also important here, enabling agents to more easily connect with and utilize a diverse array of tools and data sources, such as GitHub or enterprise systems. Naturally, a richer toolkit means a more versatile and capable agent.

Beyond its active skills, an agent's ability to learn and adapt hinges on its context and memory. What information can it use in the moment, and what can it retain from past interactions? How does this memory persist and inform future actions, making the agent smarter and more personalized over time?

Finally, an agent can be a generalist or a specialist. Is it designed to handle a wide array of tasks, like the Gemini App (which is positioned as your Personal AI Assistant), or to excel in a specific domain, like Jules (which is an asynchronous Coding Agent)? Generalists offer broad versatility, while specialists can provide superior accuracy and efficiency in their niche.

The second pillar of the triad is the Environment(s) where the agent operates. I like to think about different "planes" of operation: the foreground (on the user's device and in their direct view), the background (still on the user's device but not actively visible), and the cloud (virtual machines on a server, independent of the user's device). The "device" here could be a computer, a mobile phone, or even a wearable. For example, the initial Project Mariner was a Chrome extension that lived entirely within the user's local browser and operated only in the foreground. The newer version operates primarily in the cloud, using virtual machines, but it can still interact with the user's foreground browser in a limited capacity, such as sending tabs back. This shift in environment is crucial because it unlocks new capabilities, such as background processing and multitasking. Ultimately, a truly seamless agent will likely need to operate fluidly across all three of these planes in a coordinated manner.

Rounding out the triad is the Ecosystem. Most advanced agents are not islands. They will inevitably need to connect with other apps, APIs, and data sources. Understanding these external dependencies and integration points is crucial for building a functional and valuable experience. This isn't just about technical compatibility (covered above under the agent's skills and tools); it also encompasses product considerations (e.g., what happens when an external integration changes?) and business model considerations (e.g., will agents market to other agents?). Thinking through these three pillars – Agent, Environment, and Ecosystem – helps me map out the foundational architecture and scope right from the start.

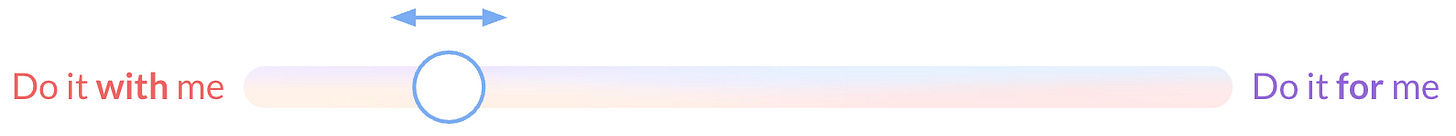

Framework 2: The User Interaction Spectrum - "Do It With Me" vs. "Do It For Me"

Once the basic product architecture and landscape are taking shape, this next framework helps me think through the user experience. Critically, how involved does the user want to be, or need to be, in any given task? This isn't always a binary choice; it's more of a spectrum.

On one end, we have "Do It With Me" scenarios, representing collaborative augmentation. These are tasks where the user remains firmly in the loop. They might enjoy the core process or need to provide creative direction during execution of a job, but could significantly benefit from AI handling tedious sub-steps. A great example is a content creator uploading a new video to YouTube. They might love setting the creative direction and the act of filming, but find the process of sifting through footage for the perfect still frame to include as the default video thumbnail to be a grind. An agent could work with them, suggesting frames based on the video content, with the creator making the final selections and maintaining control. In these "Do It With Me" interactions, the engagement between user and agent typically happens in real-time, almost certainly in the foreground environment we discussed earlier, perhaps with brief hand-offs to the background for specific processing tasks.

On the other end of the spectrum is "Do It For Me" – delegated automation. These are tasks that are necessary but not particularly enjoyable, or perhaps highly repetitive. Users are often happy to offload such work entirely, provided they trust the agent to perform accurately. Typical candidates are tasks that are:

Time-consuming but necessary: Think about the painstaking process of filling out the endless forms required for sending a child to camp. It's repetitive, with various forms often asking for similar information (address, allergies, emergency contacts), and it's a chore most parents would gladly delegate if they trusted the agent to do it accurately and securely. The value here is pure time-saving and mental load reduction.

Repetitive or rule-based: Many routine business processes, like generating weekly sales reports from a database, categorizing customer support tickets based on keywords, or even scheduling recurring meetings based on team availability, fall into this category. Once the “rules” are defined, an agent can execute them tirelessly.

Requiring extensive information gathering and synthesis: Consider the "Deep Research" features emerging in some AI tools. A user might ask for a comprehensive overview of a complex topic, like "the impact of quantum computing on cybersecurity." In these "Do It For Me" scenarios, the agent goes out, sifts through potentially hundreds of sources, evaluates their relevance and credibility, synthesizes the findings, and then constructs a full research report, often in the cloud, before presenting a summarized or detailed view to the end user. The agent isn't just retrieving information; it's performing the significant cognitive tasks of analysis and structuring.

Better suited for an agent's unique capabilities: Sometimes, a task is best handled by an agent because it can tirelessly process and synthesize large volumes of varied, unstructured information. For instance, imagine an agent continuously triaging and summarizing incoming product feature requests. It could monitor multiple channels – customer support tickets, community forum posts, internal feedback forms, and even social media mentions. The agent could then identify common themes, categorize requests by product area or urgency, flag potential high-impact ideas, and deliver a concise, synthesized summary of the top emerging feature needs to the product team every morning. This allows the PM team to start their day with a clear, prioritized overview, saving hours of manual sifting and ensuring valuable user insights aren't missed in the deluge of daily feedback.
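To make the feature-request triage idea concrete, here is a minimal sketch in Python. It assumes requests arrive as (channel, text) pairs and uses plain keyword matching to tag themes. The theme names, keywords, and sample requests are all hypothetical, and a real agent would more likely use a language model than a keyword list.

```python
from collections import Counter

# Hypothetical theme keywords, for illustration only. A real agent would
# likely classify requests with a model rather than keyword matching.
THEMES = {
    "export": ["export", "csv", "download"],
    "dark mode": ["dark mode", "dark theme"],
    "performance": ["slow", "lag", "timeout"],
}

def tag_themes(text):
    """Return the sorted list of themes whose keywords appear in the text."""
    lowered = text.lower()
    return sorted(
        theme
        for theme, keywords in THEMES.items()
        if any(keyword in lowered for keyword in keywords)
    )

def daily_digest(requests, top_n=3):
    """Count themes across (channel, text) pairs and return the most common."""
    counts = Counter()
    for _channel, text in requests:
        counts.update(tag_themes(text))
    return counts.most_common(top_n)

# Made-up requests from the channels mentioned above.
requests = [
    ("support", "Please add CSV export for reports"),
    ("forum", "The dashboard is really slow on large accounts"),
    ("social", "Would love a dark theme!"),
    ("feedback_form", "Export to CSV keeps timing out"),
]
digest = daily_digest(requests)
for theme, count in digest:
    print(f"{theme}: {count} request(s)")
```

The shape is what matters here: many channels in, one short prioritized list out. Swapping the keyword matcher for something smarter would not change that contract.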

The key psychological barrier for "Do It For Me" scenarios is trust. Users need to feel confident that the agent will perform the task correctly, securely (especially with sensitive data), and reliably. Building this trust often involves clear communication from the agent about its plan, progress (if the task is long-running), and any ambiguities it encounters. It also means providing users with appropriate levels of oversight and the ability to intervene or correct the agent if necessary, even if the primary mode is delegation.

Understanding where a task falls on this spectrum is critical for designing the right user experience: does the user want a co-pilot in their creative endeavors, or a fully autonomous delegate for mundane tasks?

Framework 3: Scope of Operation - Individual Tasks vs. Multi-Step Workflows

Beyond how a user interacts with an agent, it's helpful to think about the scope of operation: are you designing for discrete individual tasks or more complex, multi-step workflows?

An individual task is typically a single, relatively self-contained action or request. Think of an agent being asked to "Summarize this article," "Find me the weather forecast for tomorrow," or "Add eggs to my online shopping cart." These interactions are usually shorter, with a clear, immediate output.

A workflow, on the other hand, usually involves a sequence of interconnected tasks performed over time to achieve a larger, overarching goal. Consider the workflow of "Planning a family vacation." This isn't a single request; it's a journey that could involve multiple distinct tasks, such as:

Researching potential destinations based on criteria like budget, interests, and travel time.

Finding and comparing flight options.

Booking accommodations.

Identifying and scheduling activities.

Creating a shareable itinerary.

Perhaps even gathering feedback from family members at various stages.

A larger workflow often consists of many individual tasks, some of which can be performed entirely by the agent, others requiring user interaction, and some perhaps even handled entirely by the user.

Distinguishing between designing for tasks versus workflows raises several interesting product considerations. For instance, how should the User Experience (UX) differentiate or evolve between initiating a single task versus a longer workflow? Furthermore, for an agent to effectively manage a workflow, it needs a robust way to handle how outcomes, context, and any generated artifacts from one task carry forward into subsequent tasks. This implies a more sophisticated approach to what information gets saved from individual tasks and entire workflows into the agent's memory, allowing it to maintain coherence and learn over time. These aren't just technical challenges; they're core to designing agents that can be truly effective partners in achieving larger goals.
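One way to picture how outcomes and artifacts carry forward is a shared context object that every task reads from and writes to. The sketch below is a simplified illustration, not a description of how any particular agent works. The class names, task names, and placeholder results are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class WorkflowContext:
    """Shared state that each task can read from and add to."""
    goal: str
    artifacts: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

@dataclass
class Task:
    name: str
    run: Callable[[WorkflowContext], object]
    needs_user: bool = False  # True if the user must be in the loop

def run_workflow(ctx, tasks):
    """Run tasks in order, storing each result so later tasks can use it."""
    for task in tasks:
        result = task.run(ctx)
        ctx.artifacts[task.name] = result
        suffix = " (with user)" if task.needs_user else ""
        ctx.log.append(f"{task.name}: done{suffix}")
    return ctx

# Placeholder task bodies; real ones would call tools or ask the user.
def pick_destination(ctx):
    return "Lisbon"

def find_flights(ctx):
    destination = ctx.artifacts["pick_destination"]
    return [f"Flight A to {destination}", f"Flight B to {destination}"]

def build_itinerary(ctx):
    return {
        "destination": ctx.artifacts["pick_destination"],
        "flight": ctx.artifacts["find_flights"][0],
    }

ctx = run_workflow(
    WorkflowContext(goal="Plan a family vacation"),
    [
        Task("pick_destination", pick_destination, needs_user=True),
        Task("find_flights", find_flights),
        Task("build_itinerary", build_itinerary),
    ],
)
print(ctx.artifacts["build_itinerary"])
```

Deciding which entries in `artifacts` and `log` deserve to outlive the workflow and move into longer-term memory is exactly the product question raised above.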

Framework 4: Levels of "Learning" & Personalization

"Learning" can feel like a loaded term in AI. From a product perspective, I find it useful to break it down into at least three distinct layers, each offering a different kind of value for agent products.

First is Global Learning, which focuses on general capability improvement. Here, the agent gets better at performing a task for everyone (usually based on broad data and benchmarks). This is about continuously improving its core competency on universal tasks. Think of this as the classic product feedback and iteration loop, which can be applied to the feature set, as well as at the foundational model or system level. As more users interact with the agent, and as product builders gather more diverse data about its performance across a wide range of scenarios, this information can be fed back into improving the core models, refining prompting strategies, or enhancing the entire product. These improvements are then typically shipped out to all users, raising the baseline capability for everyone. This continuous refinement is essential for an agent to become more robust, reliable, and intelligent over time, especially on common tasks.

Next, there's Group or Organizational Learning, aimed at workflow standardization and optimization within a specific set of users, like a company or a team. For instance, if multiple employees perform a similar multi-step process, such as responding to a customer support ticket, but each does it slightly differently, an agent could learn the most efficient or compliant version and help standardize it across the team. This raises interesting questions: who defines the "best" workflow – an individual, a team lead, or is it derived from observing many? And importantly, is the "best" workflow actually a new one, redesigned to take advantage of capabilities that only become available once an agent is part of the process?

Finally, and perhaps most powerfully for many applications, is Individual Learning or personalization. This is where the agent adapts to your specific preferences, habits, and even your unique "signature moves." I have a very particular workflow for sending birthday thank you cards for my kids, involving selecting a photo, applying a specific AI artistic effect, and writing a personalized note to anyone who gifted them something. An agent that could learn this multi-step process and then assist or automate parts of it, perfectly tailored to my way of doing things, would be incredibly valuable to me.

For general-purpose consumer use cases, this individual personalization is where agents can truly shine, moving beyond generic assistance to become deeply integrated into a user's unique way of working and living. This relies heavily on robust memory and the ability to adapt based on individual usage patterns. For enterprise use cases, on the other hand, organizational or group learning often holds the key to unlocking significant value.
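The three layers can be thought of as a stack of settings, where the most specific layer wins. The sketch below illustrates only that lookup order using Python's `collections.ChainMap`; it says nothing about how each layer is actually learned. Every key and value is made up for illustration.

```python
from collections import ChainMap

# All keys and values below are hypothetical examples.
global_defaults = {"tone": "neutral", "card_style": "plain", "sign_off": "Best"}
team_settings = {"tone": "friendly"}
my_settings = {"card_style": "watercolor", "sign_off": "Love, the kids"}

def resolve_preferences(individual, group, global_):
    """Individual settings win over group settings, which win over global ones."""
    return ChainMap(individual, group, global_)

prefs = resolve_preferences(my_settings, team_settings, global_defaults)
print(dict(prefs))
```

In this toy setup, `tone` falls through to the team layer while `card_style` and `sign_off` come from the individual layer. A consumer product would lean mostly on the individual layer, and an enterprise product mostly on the group layer.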

Framework 5: The "Wow" vs. "Table Stakes" Evolution

There's a fascinating, and sometimes frustrating, tension with AI: what wows users today quickly becomes taken for granted tomorrow.

When we first launched the Project Mariner Chrome extension back in December, seeing an agent click, scroll, and type in your browser felt magical. It was like the "Waymo moment" for agents – a glimpse of self-driving for the web. That initial "wow" of seeing it type text into a box was undeniably powerful.

But the novelty wears off quickly. The questions shift: "Okay, it can do this, but can I also use my browser now please?" This is natural. Users adapt, and their expectations rise. The cutting edge today is the baseline tomorrow. For anyone building cutting-edge AI products, the takeaway is clear: that initial "wow" is fantastic for capturing attention and demonstrating possibility, especially for new users unfamiliar with what's now possible. But long-term value comes from solving the core user problem effectively and reliably. The "magic" needs to transition into dependable utility. Balancing the delight of the new with the ongoing need for robust core value is a constant, critical act. Perhaps most important, because the tech is advancing so fast, you need to be shipping constantly.

Putting It All Together: From Frameworks to Product

These frameworks aren't exhaustive, of course, but they provide valuable starting points for dissecting the multifaceted challenges and opportunities of agent design. They help ensure I’m thinking holistically about an agent's capabilities, its operational context, the desired user experience, and the dynamic nature of user expectations.

Ultimately, building great AI agents is about understanding your users, the spectrum from individual tasks to workflows, the nuances of human-AI collaboration, and how to leverage AI to create experiences that are not just functional, but truly delightful and personalized.

What frameworks do you find helpful when thinking about AI agents? I'd love to hear your thoughts!