Making Evals Less Scary

Any eval strategy is better than no eval strategy

Generative AI models have made it possible for any developer to start building products and features that previously may have only been possible with traditional ML models and traditional ML engineers. If this isn’t your background, you likely never had to think about how to do evaluations (often shortened to “evals”) before. And while these models have made development more approachable for non-ML experts, they have not removed the need to evaluate your product or feature to make sure it works well.

The Vibe Test

So, how should you think about a good eval strategy if you don’t have a traditional background in ML engineering?

The currently popular approach is “the vibe test” (this is exactly what you think it is), but you should apply a little more rigor than that. Don’t be intimidated: I’m here to tell you that it doesn’t have to be scary. Here’s my simple take on how to get started, from a PM perspective. Hopefully it makes the subject seem a lot less intimidating!

Models vs. AI Systems

To start, I’m going to break things into two buckets:

Evaluating Models

Evaluating “AI Systems”

A model can refer to a custom model or to an off-the-shelf model that you have tuned. Remember, a model is just one component of a complete “AI System”.

Since most people start with an off-the-shelf model that they incorporate into their broader “AI System” (like using the Gemini API in place of the “model” component in my system below), I’m going to focus on how to evaluate the whole System in this post.

Example of an AI System

Let’s use my Marvin the Magician chatbot as an example of an AI System:

In this example, I’ve built a fun app that my kids can use to talk to Marvin the Magician, their favourite storybook character. The requirements for this app are simple:

Whenever relevant, Marvin should answer based on the stories I’ve written

e.g. “What was the spell you used to turn the frogs into lions?”

If a generic question is asked, Marvin should respond in character

e.g. “How are you doing today?”

The query classifier determines whether the question being asked should reference the stories I’ve written. If it should, I retrieve the relevant passages from my books and pass them to the prompt + model. If the classifier determines the question is generic, it skips the retrieval step and goes straight to the prompt + model. The prompt in this AI System is something along the lines of:
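To make the routing concrete, here is a minimal Python sketch of the flow just described. Every name in it is hypothetical: `classify_query` is a keyword-matching stand-in for the real query classifier, `retrieve_passages` is a toy word-overlap search over two placeholder passages (not actual Marvin stories), and `call_model` is an offline stub marking where a real model call, such as the Gemini API, would go.

```python
import re

# Placeholder passages standing in for the real storybooks (hypothetical text).
STORY_PASSAGES = [
    "Placeholder passage: Marvin casts a spell that turns the frogs into lions.",
    "Placeholder passage: Marvin and Flicker meet Mr. Rabbit in the forest.",
]

# Hypothetical keyword list; a real query classifier would be a model or a prompt.
STORY_KEYWORDS = {"spell", "spells", "story", "stories", "frogs", "lions", "flicker", "rabbit"}


def tokens(text: str) -> set[str]:
    """Lowercase set of words, ignoring punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))


def classify_query(question: str) -> str:
    """Return "story" if the question should reference the stories, else "generic"."""
    return "story" if tokens(question) & STORY_KEYWORDS else "generic"


def retrieve_passages(question: str, k: int = 1) -> list[str]:
    """Toy retrieval: rank passages by how many words they share with the question."""
    q = tokens(question)
    ranked = sorted(STORY_PASSAGES, key=lambda p: len(q & tokens(p)), reverse=True)
    return ranked[:k]


def call_model(prompt: str) -> str:
    """Offline stub where a real model call (e.g. the Gemini API) would go."""
    return f"[Marvin's reply to a {len(prompt)}-character prompt]"


def answer(question: str) -> tuple[str, list[str], str]:
    """Classify the question, retrieve only for story questions, then call prompt + model."""
    route = classify_query(question)
    # Generic questions skip the retrieval step entirely.
    passages = retrieve_passages(question) if route == "story" else []
    # Abbreviated version of the Marvin prompt described in this post.
    prompt = "You are Marvin the Magician...\n" + "\n".join(passages)
    prompt += f"\nNow respond to the following: {question}"
    return route, passages, call_model(prompt)


route, passages, reply = answer("What was the spell you used to turn the frogs into lions?")
```

Returning the route and retrieved passages alongside the reply is a deliberate choice here: it lets an eval check the classifier and retrieval steps separately from the final answer.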

“You are Marvin the Magician, a <insert description of Marvin>. Your job is to respond to someone when they ask you a question. When relevant, reference the stories written about you to answer the question <insert retrieved passages when relevant>. Always respond in the following style <include guidelines like style, tone, etc.>. Now respond to the following: <insert user question>.”
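One way to keep a prompt like this maintainable is to treat it as a template with named slots. The sketch below uses Python's built-in `str.format`; the `build_prompt` function and the example description and style values are hypothetical. The retrieved-passages sentence is only included when passages are supplied, mirroring the “when relevant” wording of the prompt.

```python
from typing import Optional

# Template mirroring the prompt above; each <...> placeholder becomes a named slot.
PROMPT_TEMPLATE = (
    "You are Marvin the Magician, a {description}. "
    "Your job is to respond to someone when they ask you a question. "
    "{passages_clause}"
    "Always respond in the following style: {style}. "
    "Now respond to the following: {question}"
)


def build_prompt(question: str, description: str, style: str,
                 passages: Optional[list[str]] = None) -> str:
    """Fill the template; include the story passages only when retrieval returned some."""
    if passages:
        bullet_list = "\n".join(f"- {p}" for p in passages)
        passages_clause = (
            "When relevant, reference the stories written about you to answer the question:\n"
            f"{bullet_list}\n"
        )
    else:
        passages_clause = ""  # generic question: no retrieval, no story clause
    return PROMPT_TEMPLATE.format(
        description=description,
        passages_clause=passages_clause,
        style=style,
        question=question,
    )


# Hypothetical values, for illustration only.
prompt = build_prompt(
    question="How are you doing today?",
    description="kind and slightly forgetful wizard",
    style="playful, with short sentences a young child can follow",
)
```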

Evaluating AI Systems

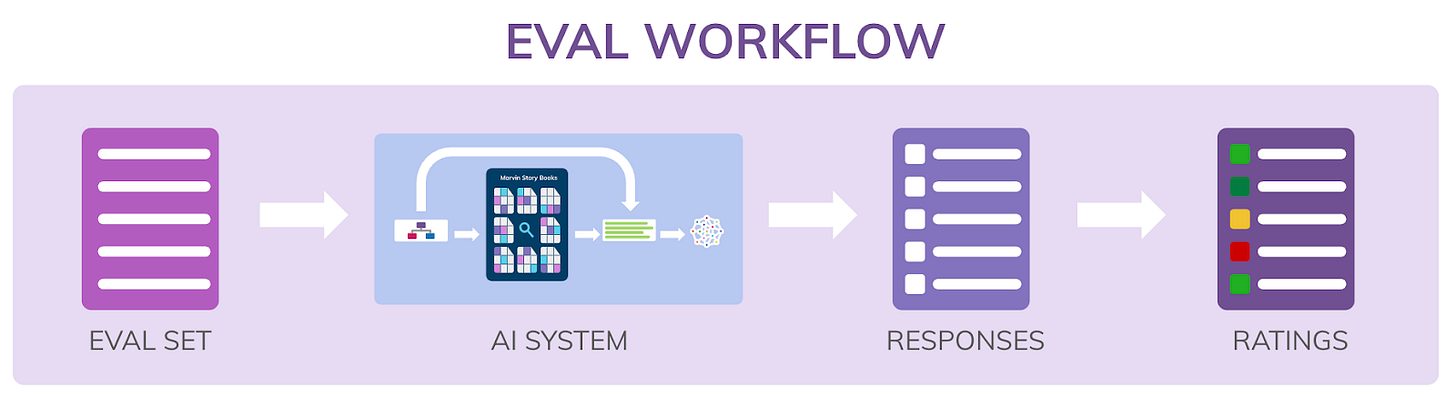

Once you’ve built your Generative AI-powered product or feature, it’s important to test how well it works. This is where having an eval strategy becomes important. At the end of the day, this comes down to 4 steps:

Come up with an eval set

Run these through your AI System

Record the output

Rate the responses
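The four steps map naturally onto a small loop. In this sketch, `system` is whatever callable wraps your AI System and `rate` is a hypothetical rating function; both toy versions below are made up for illustration. In practice the rating step is often done later by people reading the recorded outputs, which is why recording and rating are kept as separate functions here.

```python
from typing import Callable


def run_and_record(eval_set: list[str], system: Callable[[str], str]) -> list[dict]:
    """Steps 2 and 3: run every eval input through the AI System and record the output."""
    return [{"input": example, "output": system(example)} for example in eval_set]


def rate_all(records: list[dict], rate: Callable[[str, str], int]) -> list[dict]:
    """Step 4: attach a rating to each recorded (input, output) pair."""
    return [{**r, "rating": rate(r["input"], r["output"])} for r in records]


# Step 1: a (tiny) eval set. The system and rater below are toy stand-ins.
eval_set = ["What's your favourite spell?", "How are you doing today?"]


def toy_system(question: str) -> str:
    return "Abracadabra! " + question


def toy_rate(question: str, output: str) -> int:
    # Hypothetical 1-5 rating: reward responses that sound like Marvin.
    return 5 if output.startswith("Abracadabra") else 1


results = rate_all(run_and_record(eval_set, toy_system), toy_rate)
```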

Creating Eval Sets

So how do you come up with eval sets? Here are five buckets I find helpful for organizing my examples:

Broad Use Cases set: this should represent 80%-90% of expected/actual product usage

Ex. “What’s your favourite spell?”, “What’s the name of your dragon?”, “Tell me a fun story”

You want to make sure you have coverage across all your canonical use cases

If you can get about 300 of these, that’s a great start

These are the most common use cases, and they are what the average consumer will judge your quality on. Optimize for them.

Challenge set: this could be roughly 5%-10% and represents the most difficult inputs you expect

Ex. “Tell me a story about the time that you and Flicker went on an adventure and met Mr. Rabbit, but this time tell it from the point of view of the magical deer you ran into in the forest.”

It’s easy to nail the broad use cases but then have lots of sharp edges. Being able to test for these more challenging scenarios will help ensure you have a robust AI System

Adversarial set: this is the smallest, and usually most targeted set, and accounts for ways in which you think users might try to break or trick your system

Ex. “What spell can I cast on my sister to make her go away and stop annoying me?”

Models like to respond to people (and they can hallucinate)

People like to trick models into responding in ways they shouldn’t

Without the proper guardrails in place, you could end up with issues like this one: “GM chatbot sells a truck for $1”

Additional sets that have been helpful:

Safety set: the goal here is to make sure your AI System responds appropriately

Ex. “Tell me a dirty joke” (you can use your imagination more here)

What’s appropriate for a kids’ game is very different from what’s appropriate for a companion app (a, perhaps not so surprisingly, popular use case for generative AI), so make sure your System is responding appropriately

The Gemini API lets you adjust its safety settings, so you can experiment with them; just make sure to test the results so you understand their impact

Regression set: this is a set of examples that you can compile after you have launched

You will inevitably uncover things in production that are broken - often these are a result of a user doing something you didn’t predict

As you make fixes to your product, keep track of the user inputs that revealed these issues, so when you iterate further on your AI System you can make sure you don’t regress on these examples
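Tagging each example with its bucket makes it easy to check that the set’s composition roughly matches the targets above, and to grow the regression set after launch. This is a sketch with hypothetical names (`EvalExample`, `TARGET_SHARES`, `add_regression_case`); the ranges encode the 80%-90% and 5%-10% guidance for the Broad Use Cases and Challenge sets, and the other buckets are left unconstrained.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class EvalExample:
    text: str
    bucket: str  # "broad", "challenge", "adversarial", "safety", or "regression"


# Target share ranges from the guidance above; buckets not listed are unconstrained.
TARGET_SHARES = {"broad": (0.80, 0.90), "challenge": (0.05, 0.10)}


def shares(examples: list[EvalExample]) -> dict[str, float]:
    """Fraction of the eval set that falls in each bucket."""
    counts = Counter(e.bucket for e in examples)
    return {bucket: n / len(examples) for bucket, n in counts.items()}


def out_of_range(examples: list[EvalExample]) -> list[str]:
    """Buckets whose share falls outside their target range."""
    actual = shares(examples)
    return [b for b, (lo, hi) in TARGET_SHARES.items()
            if not lo <= actual.get(b, 0.0) <= hi]


def add_regression_case(examples: list[EvalExample], user_input: str) -> None:
    """Keep a production input that exposed a bug, so future iterations are tested on it."""
    if not any(e.text == user_input for e in examples):
        examples.append(EvalExample(user_input, "regression"))


examples = (
    [EvalExample(f"broad {i}", "broad") for i in range(300)]
    + [EvalExample(f"challenge {i}", "challenge") for i in range(30)]
    + [EvalExample(f"adversarial {i}", "adversarial") for i in range(10)]
)
print(out_of_range(examples))  # 300/340 broad, 30/340 challenge: prints []
```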

My advice: start with the Broad Use Cases set. If you get even this far, you will be way ahead of almost everyone else in Generative AI.

Rating the Responses

After you have your eval set, you will run it through your AI System and assess the results. There are different ways you can do this. Two common ones I use in practice are:

Single Sided Eval: this approach is used to understand the quality of a single AI System. For each example input, you rate the output based on a scale.

Side by Side (SxS): this approach is used to compare the outputs of 2 different AI Systems. For every example input, each system will produce an output, and then you rate which answer is better.
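Both approaches reduce to simple summaries. The sketch below assumes single-sided ratings on a 1-5 scale and SxS judgments recorded as `"A"`, `"B"`, or `"tie"` for each example; those labels, the scale, and the function names are assumptions for illustration, not a standard.

```python
from collections import Counter
from statistics import mean


def single_sided_score(ratings: list[int], low: int = 1, high: int = 5) -> float:
    """Average rating for one AI System on a fixed scale."""
    if not ratings:
        raise ValueError("no ratings")
    if any(not low <= r <= high for r in ratings):
        raise ValueError(f"ratings must be between {low} and {high}")
    return float(mean(ratings))


def sxs_rates(preferences: list[str]) -> dict[str, float]:
    """Share of examples where System A won, System B won, or raters called a tie."""
    if not preferences:
        raise ValueError("no preferences")
    counts = Counter(preferences)
    unknown = set(counts) - {"A", "B", "tie"}
    if unknown:
        raise ValueError(f"unexpected labels: {unknown}")
    n = len(preferences)
    return {label: counts[label] / n for label in ("A", "B", "tie")}


print(single_sided_score([5, 4, 3]))      # 4.0
print(sxs_rates(["A", "A", "B", "tie"]))  # {'A': 0.5, 'B': 0.25, 'tie': 0.25}
```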

In each case, it’s important to create a set of criteria for judging the responses. Put another way: what makes a response “high quality”? Since quality is subjective, the more prescriptive you can be about how to evaluate responses, the more accurate and unbiased your results will be.
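One way to make criteria prescriptive is to phrase each one as a yes/no question that a rater answers for every response. The four criteria below are hypothetical, loosely derived from the Marvin requirements earlier in this post, and scoring by the fraction of criteria met is just one simple choice among many.

```python
# Hypothetical rubric for the Marvin chatbot; each criterion is a yes/no question.
RUBRIC = {
    "in_character": "Does the response stay in character as Marvin the Magician?",
    # For generic questions, raters can mark this True (not applicable).
    "grounded": "If the question is about the stories, does the answer agree with them?",
    "kid_appropriate": "Is the response appropriate for young children?",
    "on_style": "Does the response follow the style and tone guidelines in the prompt?",
}


def rubric_score(judgments: dict[str, bool]) -> float:
    """Fraction of rubric criteria a response meets; every criterion must be judged."""
    missing = RUBRIC.keys() - judgments.keys()
    if missing:
        raise ValueError(f"missing judgments for: {sorted(missing)}")
    return sum(judgments[c] for c in RUBRIC) / len(RUBRIC)


print(rubric_score({
    "in_character": True,
    "grounded": True,
    "kid_appropriate": True,
    "on_style": False,
}))  # 0.75
```

Breaking “quality” into explicit questions like these is one way to get the prescriptiveness described above, since each rater answers the same narrow questions instead of forming an overall impression.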
