Stop Theorizing, Start Prompting: Build Better AI Agents

Why defining Core User Prompts first is the key to unlocking practical features, intuitive UX, and effective testing.

Building AI agents often feels like navigating a dense fog, doesn't it? Endless debates about the "right" architecture, theoretical capabilities, potential integrations... it's easy to get lost in the abstract tech before you even know exactly what you want the agent to do for the user.

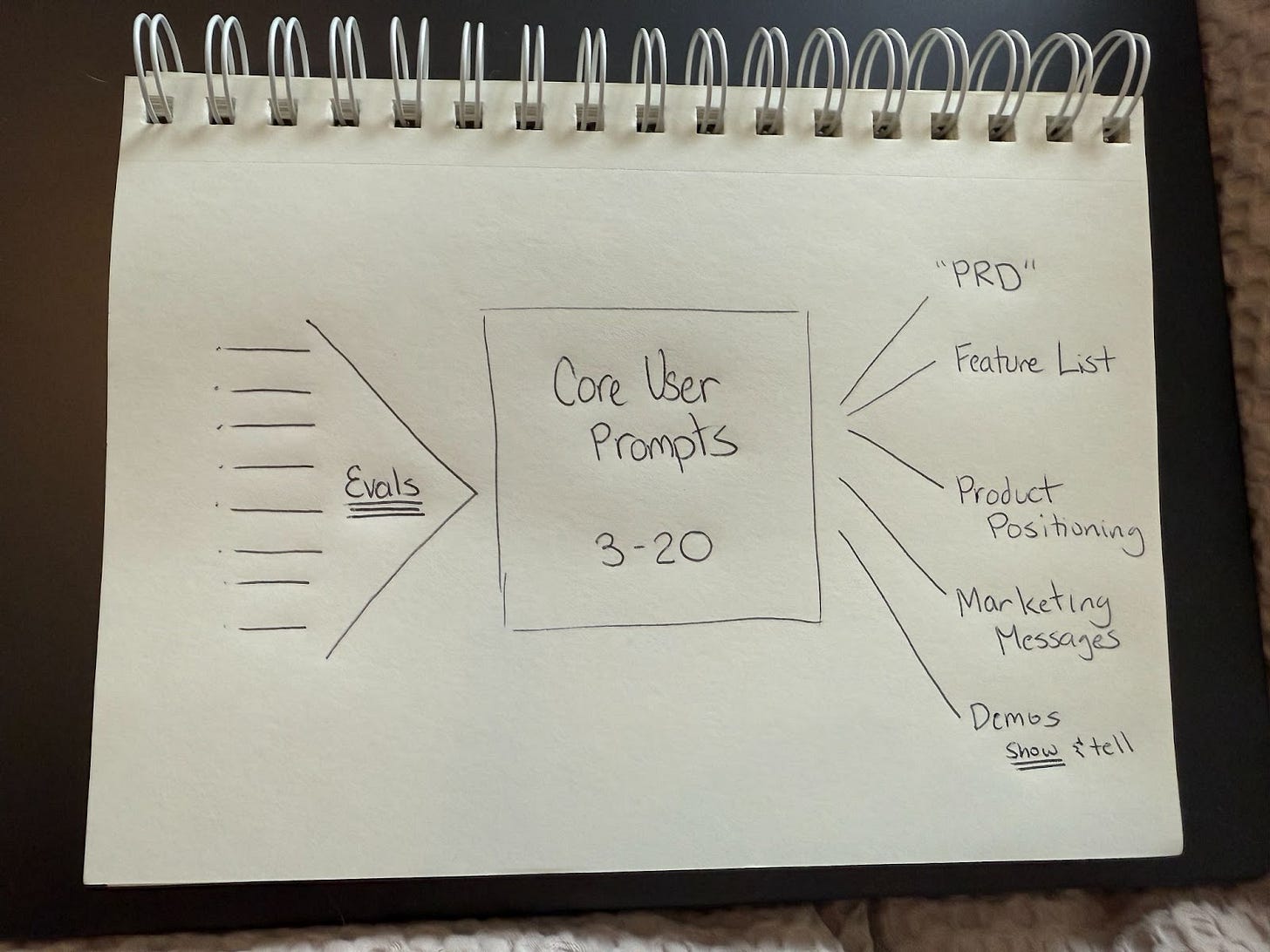

I've been working on a few "Agent-first" products lately, and I've found an incredibly helpful way to cut through that fog: start by defining a set of Core User Prompts (CUPs).

As agents become more prevalent, the difference between a fascinating tech demo and a genuinely useful and effective product often lies in deeply understanding and designing for specific user needs from day one. CUPs force you to do just that. Everything else – features, user experience, even testing – flows naturally from there.

Defining CUPs makes the abstract concrete. It shifts the conversation from theoretical architecture debates to designing something tangible for the actual product you aim to build.

Let's Make It Real: The Personal Meal Planner Agent

Consider a simple example: an agent designed to help with personal meal planning. Instead of starting with database choices or model APIs, let's start with what a user might actually say to it.

Assume these four Core User Prompts represent key things you'd expect this agent to handle:

CUP 1: "Find me a recipe for chocolate chip cookies." (Basic request)

CUP 2: "Here's a picture of what's in my fridge. What can I make for dinner?" (Multi-modal input)

CUP 3: "Create a 3-day meal plan for me. I'm vegetarian, love spicy food, and want to try some new cuisines." (Complex generation with constraints)

CUP 4: "Buy these groceries for me." (Action/integration, likely as a follow-up)

Suddenly, the agent starts taking shape.

How CUPs Shape Your Product

With these CUPs defined, you can immediately start identifying necessary features and thinking through the user experience.

From Prompts to Features:

CUP 1 ("Find a recipe...") clearly requires basic recipe retrieval or generation based on text input. Simple enough.

CUP 2 ("Here's a picture...") demands more. You need to support image uploads and use a multi-modal model capable of visual understanding, for example to recognize the ingredients in the fridge photo.

CUP 3 ("Create a meal plan...") implies significant generation capabilities, constrained by the user's dietary restrictions and preferences. But a great experience requires more:

Saving: Users need a way to save that meal plan to reference later.

Reminders: Maybe push notifications or emails about upcoming meals?

Shopping Lists: The ability to generate a shopping list from the plan is a great addition.

CUP 4 ("Buy these groceries...") points directly to action capabilities and integrations. Does this mean partnering with local grocery stores? Or, if you are the store, building the capability into your existing app?

See how the CUPs directly dictate the required feature set, moving from simple retrieval to complex generation and real-world action?
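One way to keep this mapping honest is to record it as data that the team reviews alongside the CUPs. The Python sketch below is hypothetical: the `CUP` dataclass, the capability labels, and the helper names are invented for illustration, not a standard schema. It lists the capabilities each CUP implies and derives the full feature set as their union.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CUP:
    """A Core User Prompt plus the capabilities it implies (hypothetical schema)."""
    cup_id: int
    prompt: str
    capabilities: frozenset = field(default_factory=frozenset)


# The four meal-planner CUPs; capability labels are illustrative, not a standard taxonomy.
MEAL_PLANNER_CUPS = [
    CUP(1, "Find me a recipe for chocolate chip cookies.",
        frozenset({"text_input", "recipe_retrieval"})),
    CUP(2, "Here's a picture of what's in my fridge. What can I make for dinner?",
        frozenset({"text_input", "image_upload", "vision_model", "recipe_retrieval"})),
    CUP(3, "Create a 3-day meal plan for me. I'm vegetarian, love spicy food, "
           "and want to try some new cuisines.",
        frozenset({"text_input", "constrained_generation", "saved_plans",
                   "reminders", "shopping_list"})),
    CUP(4, "Buy these groceries for me.",
        frozenset({"shopping_list", "grocery_integration", "user_confirmation"})),
]


def required_features(cups):
    """Union of all capabilities implied by the given CUPs, sorted for stable review."""
    features = set()
    for cup in cups:
        features |= cup.capabilities
    return sorted(features)


def cups_needing(feature, cups):
    """IDs of the CUPs that depend on a given capability (useful when scoping a release)."""
    return [cup.cup_id for cup in cups if feature in cup.capabilities]


if __name__ == "__main__":
    print(required_features(MEAL_PLANNER_CUPS))
    print(cups_needing("shopping_list", MEAL_PLANNER_CUPS))  # → [3, 4]
```

Keeping the mapping as data makes scoping conversations concrete: dropping CUP 4 from a first release, for instance, removes `grocery_integration` and `user_confirmation` from the required set while `shopping_list` stays, because CUP 3 still needs it.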

Designing the Conversation Flow (UX):

Defining CUPs also forces you to think critically about the user experience, specifically how the conversation should flow:

Clarification Strategy: When given the fridge picture (CUP 2), should the agent ask clarifying questions upfront? "Do you want to use up the chicken first?" Or should it generate several options and let the user choose? How much back-and-forth is ideal?

Planning Transparency: Before executing an action like buying groceries (CUP 4), should the agent present its plan? "My plan is to price-check stores X and Y, then buy from the cheaper one. I'll substitute organic eggs if regular ones are out. Proceed?"

Interruption Threshold: During a task like adding items to a cart, if multiple options exist (e.g., three types of eggs), should the agent interrupt to ask the user's preference, or make a reasonable default choice to maintain momentum? Where's the right balance between user control and agent autonomy?

These aren't just technical questions; they're fundamental UX decisions prompted directly by considering the core user interactions.
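At some point these UX decisions have to become explicit rules in the agent's code. The sketch below assumes a hypothetical `CartDecision` type and an illustrative policy, not a recommended one. For each grocery item in CUP 4 it uses a saved preference if that item is in stock, picks a default when there are few enough options, and otherwise stops and asks. `plan_summary` renders the kind of plan-then-confirm message described under Planning Transparency.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CartDecision:
    """Outcome of choosing one item for the cart (hypothetical type)."""
    choice: Optional[str]  # None means the agent is waiting on the user
    ask_user: bool
    reason: str


def choose_item(item, options, preferences, max_silent_options=1):
    """Illustrative interruption policy for adding one grocery item to the cart.

    1. A saved preference that is in stock is used without interrupting.
    2. With no options at all, the agent asks about substitutes.
    3. With at most `max_silent_options` options, it picks the first one silently.
    4. Otherwise it interrupts and asks, trading momentum for user control.
    """
    preferred = preferences.get(item)
    if preferred in options:
        return CartDecision(preferred, False, "matched saved preference")
    if not options:
        return CartDecision(None, True, f"no {item} in stock; ask about substitutes")
    if len(options) <= max_silent_options:
        return CartDecision(options[0], False, f"default pick from {len(options)} option(s)")
    return CartDecision(None, True, f"{len(options)} options for {item}; ask the user")


def plan_summary(steps):
    """Render the plan shown before any purchase, ending in an explicit confirmation."""
    numbered = [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "My plan:\n" + "\n".join(numbered) + "\nProceed?"


if __name__ == "__main__":
    eggs = ["large eggs", "organic eggs", "free-range eggs"]
    print(choose_item("eggs", eggs, preferences={}))                        # asks the user
    print(choose_item("eggs", eggs, preferences={"eggs": "organic eggs"}))  # uses preference
    print(plan_summary(["Price-check stores X and Y",
                        "Buy from the cheaper store",
                        "Substitute organic eggs if regular ones are out"]))
```

In this sketch, `max_silent_options` is the interruption threshold. Raising it shifts the balance toward autonomy. Setting it to 0 makes the agent ask about every item that lacks a saved preference.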

Testing Your Agent: Back to the Prompts

So, you've built your shiny new Personal Meal Planner agent. How do you know if it actually works well? Your CUPs become the foundation for your evaluation set.

Manual Expansion: Brainstorm variations around your CUPs. If "chocolate chip cookies" is a core prompt, test "sourdough bread," "vegan brownies," "quick chicken stir-fry," etc.

AI-Powered Expansion: Use AI to help! Feed your CUPs into a powerful model (like Gemini 2.5 Pro) and prompt it: "I want to create an eval set based on these 4 core prompts. Generate 10 more diverse examples for each one, covering similar intents but with different specifics."

For example, based on CUP 1 ("Find me a recipe for chocolate chip cookies"):

How do I make sourdough bread from scratch?

Find an easy recipe for weeknight lasagna.

Show me instructions for authentic Pad Thai.

I need a vegan brownie recipe that uses black beans.

What's a good recipe for classic French onion soup?

Find a quick chicken stir-fry recipe (under 30 minutes).

Can you give me a recipe for gluten-free pizza dough?

I want to make pulled pork in a slow cooker, find me a highly-rated recipe.

Recipe for a refreshing summer salad with watermelon and feta.

How to bake salmon with lemon and dill?

Doing this for each CUP quickly builds a robust evaluation set grounded in the core functionality you designed for.
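Once the variations exist, it helps to store them as structured data so each case stays traceable to the CUP it came from. The sketch below shows one hypothetical way to do that in Python. `EvalCase`, `manual_variants`, `expansion_request`, and `build_eval_set` are names invented for this illustration. The actual model call is deliberately left out, so `generated` is a hand-filled stand-in for whatever the model returns.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class EvalCase:
    cup_id: int   # which Core User Prompt this case expands
    prompt: str
    source: str   # "core", "manual", or "generated"


CORE_PROMPTS = {
    1: "Find me a recipe for chocolate chip cookies.",
    2: "Here's a picture of what's in my fridge. What can I make for dinner?",
    3: "Create a 3-day meal plan for me. I'm vegetarian, love spicy food, "
       "and want to try some new cuisines.",
    4: "Buy these groceries for me.",
}


def manual_variants(template, fillers):
    """Manual expansion: swap the specifics of a CUP while keeping its intent."""
    return [template.format(item) for item in fillers]


def expansion_request(core_prompts, per_cup=10):
    """Meta-prompt for AI-powered expansion; send it to whichever model you use."""
    listed = "\n".join(f"{cid}. {text}" for cid, text in sorted(core_prompts.items()))
    return (
        f"I want to create an eval set based on these {len(core_prompts)} core prompts:\n"
        f"{listed}\n"
        f"Generate {per_cup} more diverse examples for each one, "
        "covering similar intents but with different specifics."
    )


def build_eval_set(core_prompts, manual, generated):
    """Merge core, manual, and generated prompts per CUP, skipping case-insensitive duplicates."""
    batches = [
        ("core", {cid: [text] for cid, text in core_prompts.items()}),
        ("manual", manual),
        ("generated", generated),
    ]
    cases, seen = [], set()
    for source, by_cup in batches:
        for cup_id, prompts in sorted(by_cup.items()):
            for prompt in prompts:
                key = prompt.strip().lower()
                if key not in seen:
                    seen.add(key)
                    cases.append(EvalCase(cup_id, prompt.strip(), source))
    return cases


if __name__ == "__main__":
    manual = {1: manual_variants("Find me a recipe for {}.",
                                 ["sourdough bread", "vegan brownies", "quick chicken stir-fry"])}
    # Stand-in for model output: two of the CUP 1 variations listed above, plus a duplicate.
    generated = {1: ["Find an easy recipe for weeknight lasagna.",
                     "How to bake salmon with lemon and dill?",
                     "find me a recipe for vegan brownies."]}
    eval_set = build_eval_set(CORE_PROMPTS, manual, generated)
    print(expansion_request(CORE_PROMPTS))
    for case in eval_set:
        print(json.dumps(asdict(case)))  # one JSON object per line (JSONL)
```

Tagging each case with its source and CUP makes it easy to compare later how the agent handles prompts you wrote yourself versus model-generated phrasings, and which CUP a failing case belongs to.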

Ground Yourself in User Needs

Building effective AI agents requires more than just sophisticated technology. It demands a clear focus on the user and the tasks they want to accomplish.

Defining Core User Prompts upfront provides that focus. It grounds your design, clarifies feature requirements, guides UX decisions, and forms the basis for meaningful evaluation. It's the most direct path I've found to move from abstract ideas to building AI agents that people will actually find useful.

What Core User Prompts define the agent you're thinking about building?
