The Butterfly Effect of Bad Prompting

Why a single vague sentence can now create a mountain of mess.

“Garbage in, garbage out.”

It’s one of the oldest clichés in computing. But with the rapid evolution of generative AI, I’ve realized this phrase doesn’t capture the gravity of the situation anymore. It implies a fair trade: you give a bad input, you get a bad output. A 1:1 transaction.

But the models we are using today have fundamentally changed that equation. We are no longer dealing with simple text generators; we are working with engines of complexity.

A single input no longer leads to a single output. It fans out into a sprawling, multi-part response.

The Old World: Buddy the Golden Retriever

To see the difference, look back at the early days of LLMs. If you gave a model a lazy prompt like “Write a story about a dog,” you got a lazy result.

You’d get a painfully generic, moralistic story about a Golden Retriever, probably named Buddy or Barnaby, who learns that “friendship is the greatest treasure of all.” It was bland. It was boring. But the damage was contained. It was one paragraph of text that you could easily ignore or delete.

The New World: The Multimedia Cascade

Now, look at what happens today.

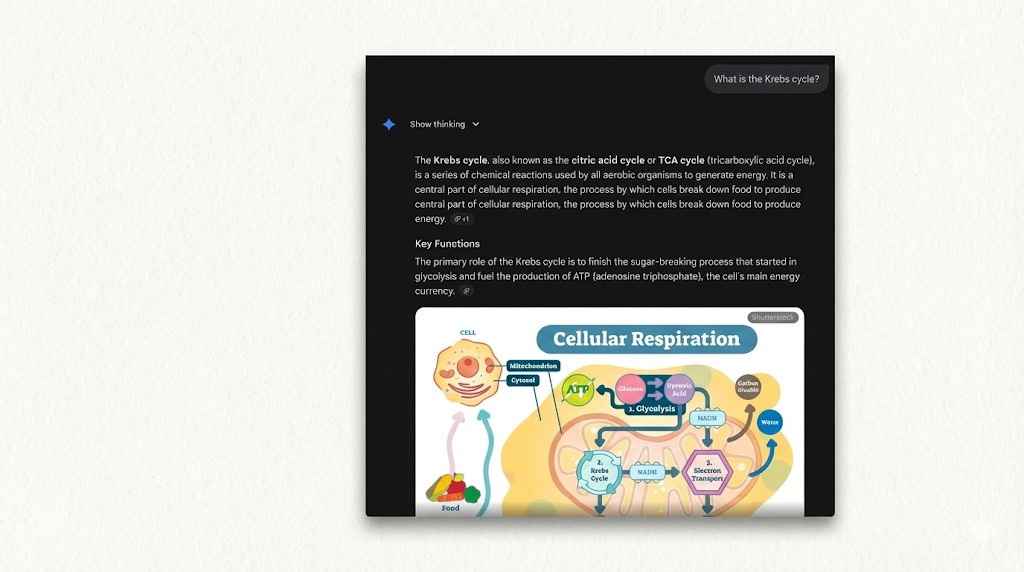

I recently asked Gemini a simple question: “What is the Krebs cycle?”

In the past, this would have returned a paragraph of text. This time? It triggered a multimedia workflow. The response included:

A detailed text explanation.

A relevant image pulled from Shutterstock to visualize the concept.

A generated summary with bullet points breaking down the steps.

Another reference image explaining mitochondria.

Citations linking to credible sources.

A link to a YouTube video for further deep diving.

The model didn’t just answer the question; it constructed a lesson plan. It went from a single sentence of input to a comprehensive, multi-modal output.

This is incredible if your input is good. But it creates a massive problem if your input is bad.

The “Fish and Chips” Problem

Here is the thing about AI products: they have a bias towards action.

Most AI products are designed to abhor a vacuum. If you leave something blank, the app (or agent) will fill it. It will make “reasonable” assumptions based on what an average user would want in order to get the job done. But “reasonable” doesn’t mean “what you wanted.”

Think of it like walking into a restaurant and simply saying to the waiter, “I want fish.”

With no other instructions, the waiter makes a reasonable assumption and brings you the most popular dish: a basket of fish and chips.

But you actually wanted grilled salmon on a salad.

Now you have a mess. You’re peeling the breading off the fish, throwing out the fries, scrounging around the fridge for lettuce, and trying to construct a salad underneath a piece of cod that is already cold. You are spending twenty minutes fixing a meal that could have been perfect if you had just taken ten seconds to order the salmon in the first place.

The Cost of Un-Frying the Fish

Now apply this to “vibe coding” a simple web app.

If you give a vague prompt like “Make me a landing page for my coffee shop,” the AI will build one. It has a bias for action, so it will choose the color scheme (like beige), the font (perhaps sans-serif), the layout, and the copy based on its “reasonable assumptions.”

But maybe you wanted a neon-punk aesthetic with a brutalist layout.

If you don’t specify that upfront, you aren’t just deleting a sentence. You are trying to un-fry the fish. You have to rework the functionality, strip out features, and redesign the style. You end up in a massive time sink trying to reverse-engineer the AI’s decisions.

The friction of creation has dropped to nearly zero, but the friction of correction has skyrocketed.

Specificity is Speed

The power of these tools means the responsibility for “craft” has shifted to the very beginning of the process.

You have to be the thoughtful, deliberate controller of the input. You have to provide the constraints. You have to set the tone.

If you take time upfront to think, ideate, and plan (to tell the “waiter” exactly what you want), you save yourself hours of cleaning up the mess later.

So, before you hit enter on that next prompt, pause. Ask yourself: Did I order the fish, or did I order the grilled salmon? Because that one sentence isn’t just a question anymore. It’s the seed for an entire tree of complexity.

Make sure you’re planting the right one.
