The Agent Design Mindset: 5 More Product Frameworks

Diving deeper into proactive design, user identity, and the art of thinking big.

A couple of weeks ago, I wrote about 5 foundational product frameworks I use for thinking about how to build AI agents, covering the core triad of Agent/Environment/Ecosystem, the user interaction spectrum, and more.

Since then, I’ve been thinking about agents even more… and I wanted to share five additional frameworks that I’ve found helpful during this rapid phase of product innovation.

Once again, none of these are meant to be infallible. Rather, they are meant to challenge how you think about agents and present new ways to approach the opportunities unfolding in front of us.

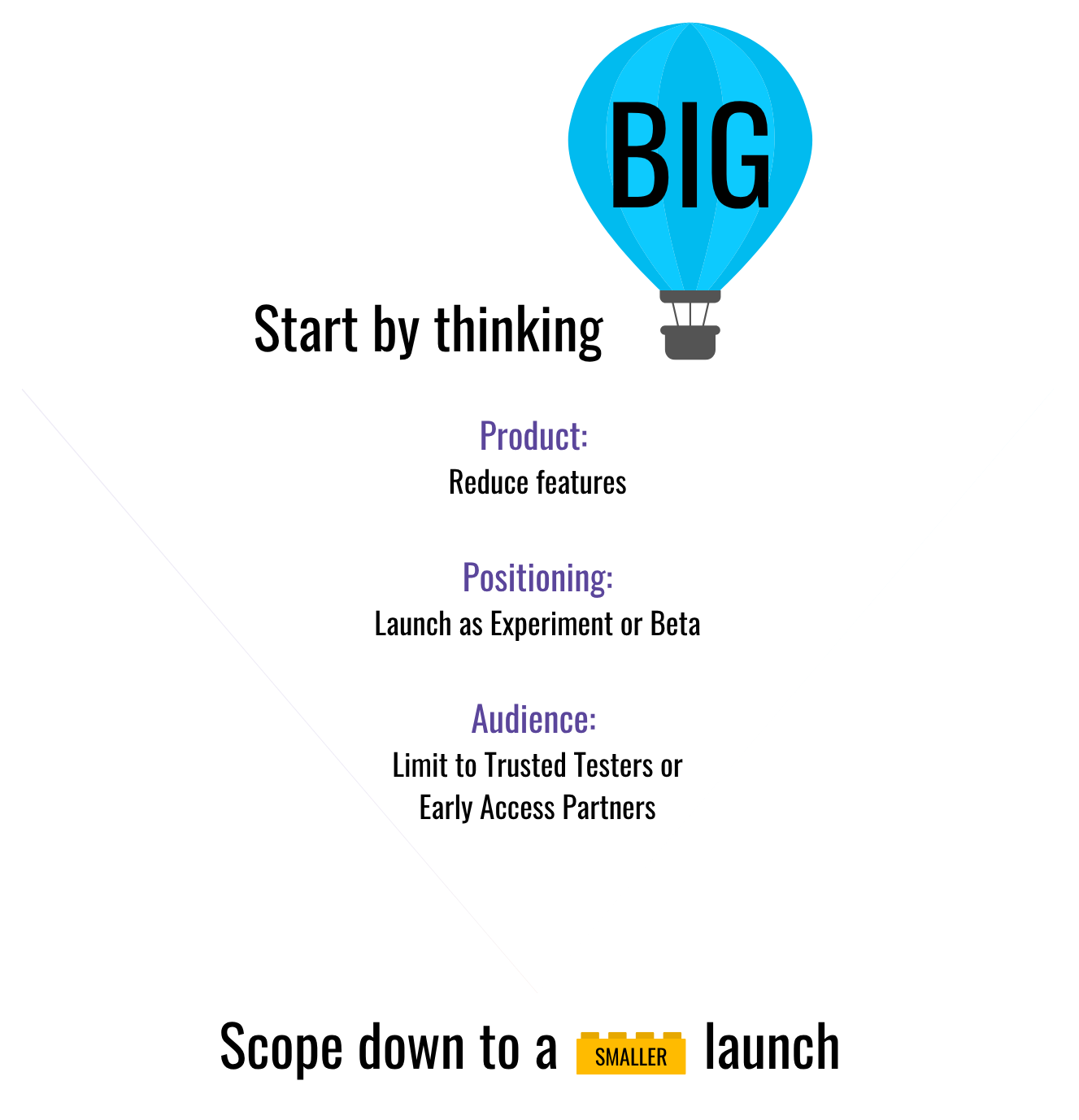

Framework 6: The Inverted Triangle (Thinking Big, Scoping Down)

The rate of progress in AI is undeniable. This often leads me to give product advice that sounds simple but is crucial: "think bigger." This is especially true for agent design. To avoid launching merely incremental improvements, you have to start with a truly ambitious vision.

Then, when it comes to setting launch targets, you can always scope down and ship in iterative milestones. I find it helpful to think along three key dimensions when deciding how to scope down:

Product: The most obvious lever is the product itself. You can reduce the number of features or simplify the complexity of a given feature for the initial launch.

Positioning: By explicitly launching as an "Experiment" or "Beta," you signal to users that things might not be perfect yet. This is incredibly useful for features that rely on emergent AI capabilities, as it helps manage the quality bar and frames early user feedback as part of a collaborative journey.

Audience: You can launch to a smaller, more targeted group at first, which lets you get the product into real hands quickly and gather feedback without opening the floodgates to everyone at once.

This is distinctly different from starting with a "realistic" launch goal and planning to add features later. The Small → Big approach often traps you in a cycle of local optimization, preventing you from fundamentally rethinking the problem space. By starting with an ambitious North Star, you ensure that even your first, scoped-down launch is a meaningful step in the right direction, not a detour.

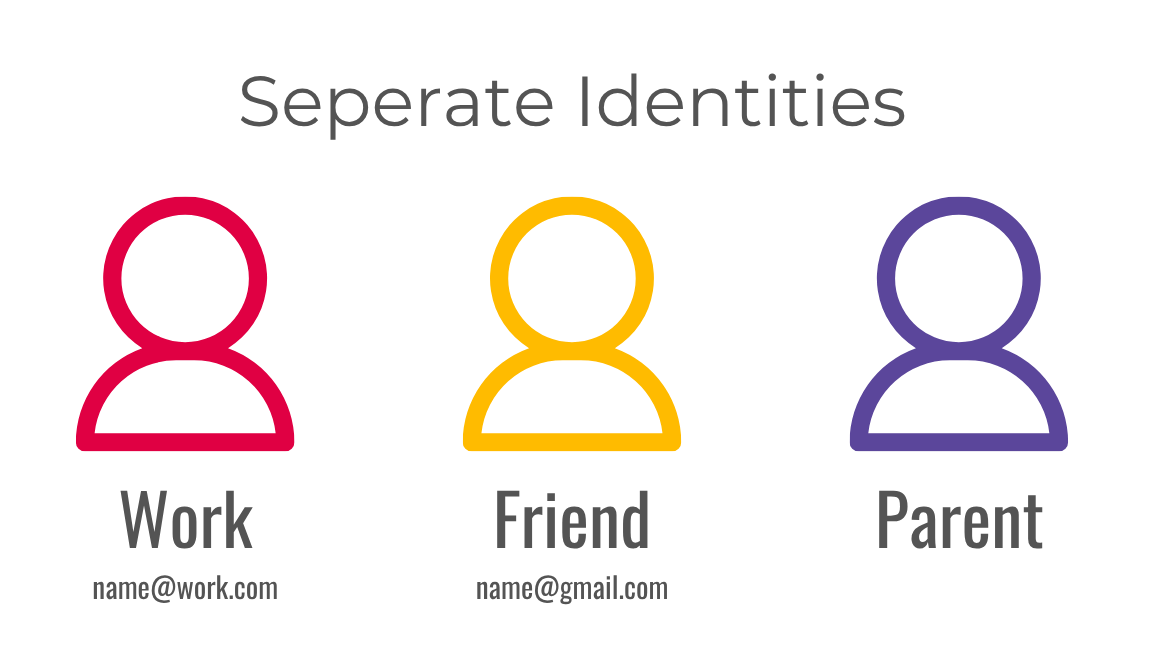

Framework 7: Rethinking Identity (From Silos to Context)

In my early PM days on the Google Assistant, we thought about a user's identity in distinct, account-based silos: for example, you could have your personal account and also your work account. Today, that model feels outdated.

Our identities are fuzzier and more fluid than simple accounts can capture. You have the version of yourself your friends know, the version you present at work, a "parent" hat for dealing with school logistics, or perhaps a public persona for social media. And it goes even deeper: even within your "work" hat, you're a slightly different person when brainstorming with a close peer versus presenting to a VP. These aren't separate people; they are different facets and subtle calibrations of a single person, YOU, activated by the specific context of each interaction.

The old way:

The new way:

This shift from siloed accounts to a single, context-aware identity is a fundamental change in how we should design agents, one that allows for more nuance and meets the user where they are. I see this in my own life:

My professional voice varies between LinkedIn, Substack, and X.

My @AIMomPlaybook Instagram account has a different tone than my personal one.

My "parent" hat is reserved for school chats and parent-teacher emails.

My work hat changes depending on whether I'm talking to peers, a potential hire, or a startup founder.

An agent that understands these contextual facets can be way more helpful. This adds another dimension: what we're willing to share, and what permissions we grant, should also be context-dependent.
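To make the idea of one person with context-dependent facets and permissions a bit more concrete, here is a minimal sketch in Python. Everything in it (the `Facet` and `Identity` names, the context labels, the data categories, and the "share nothing in unknown contexts" default) is a hypothetical illustration, not a description of how any real product models identity.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Facet:
    """One contextual facet of a single user (hypothetical structure)."""
    name: str                  # e.g. "parent" or "work:vp"
    tone: str                  # how the agent should sound in this context
    shareable: frozenset       # data categories the user allows in this context


@dataclass
class Identity:
    """A single person with many facets, instead of siloed accounts."""
    user: str
    facets: dict = field(default_factory=dict)

    def add_facet(self, facet: Facet) -> None:
        self.facets[facet.name] = facet

    def can_share(self, context: str, category: str) -> bool:
        # Assumed safe default: an unknown context shares nothing.
        facet = self.facets.get(context)
        return facet is not None and category in facet.shareable


me = Identity(user="me")
me.add_facet(Facet("parent", tone="warm", shareable=frozenset({"kids_schedule"})))
me.add_facet(Facet("work:vp", tone="formal", shareable=frozenset({"work_calendar"})))

print(me.can_share("parent", "kids_schedule"))    # True
print(me.can_share("work:vp", "kids_schedule"))   # False
print(me.can_share("social", "work_calendar"))    # False (unknown context)
```

The point of the sketch is that permissions hang off the context, not the account: the same person and the same data, with a different answer depending on which hat is active.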

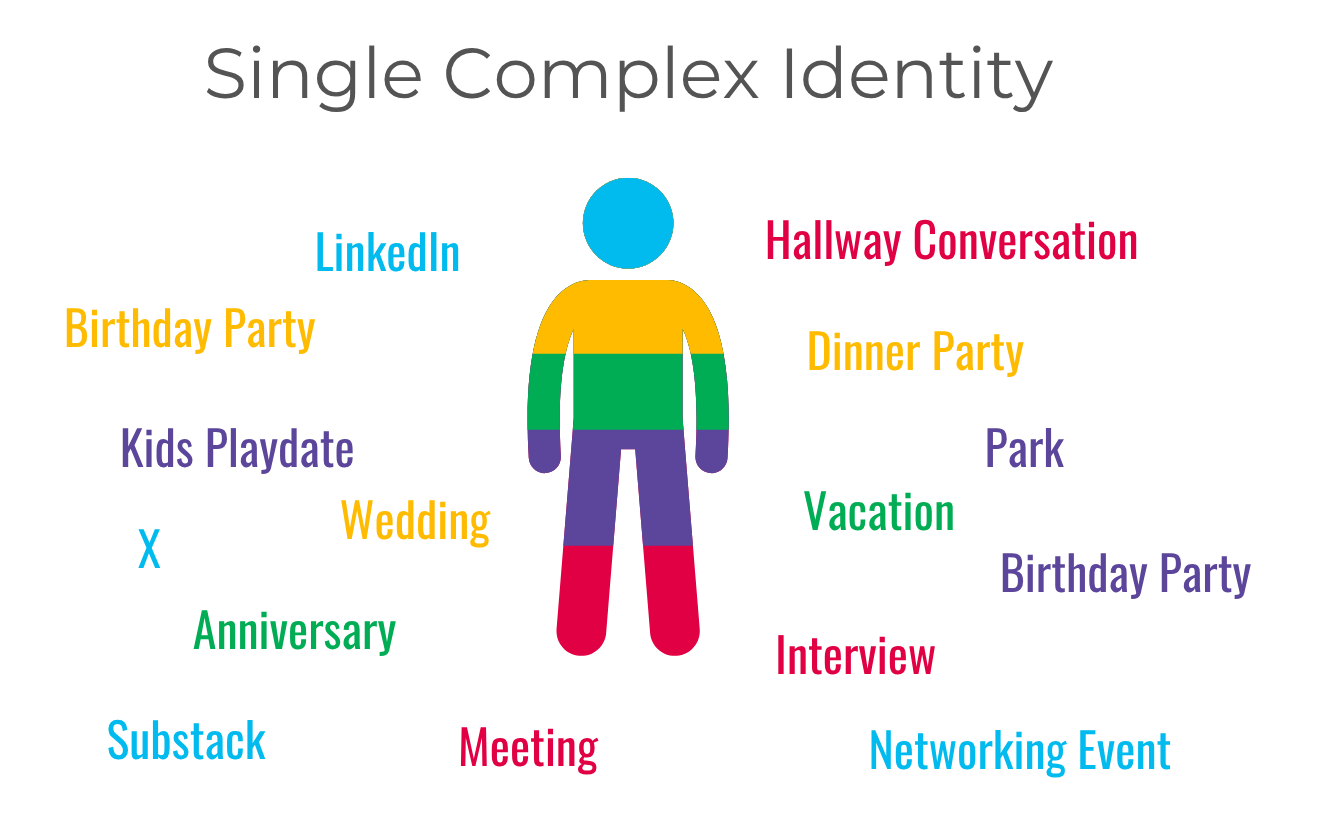

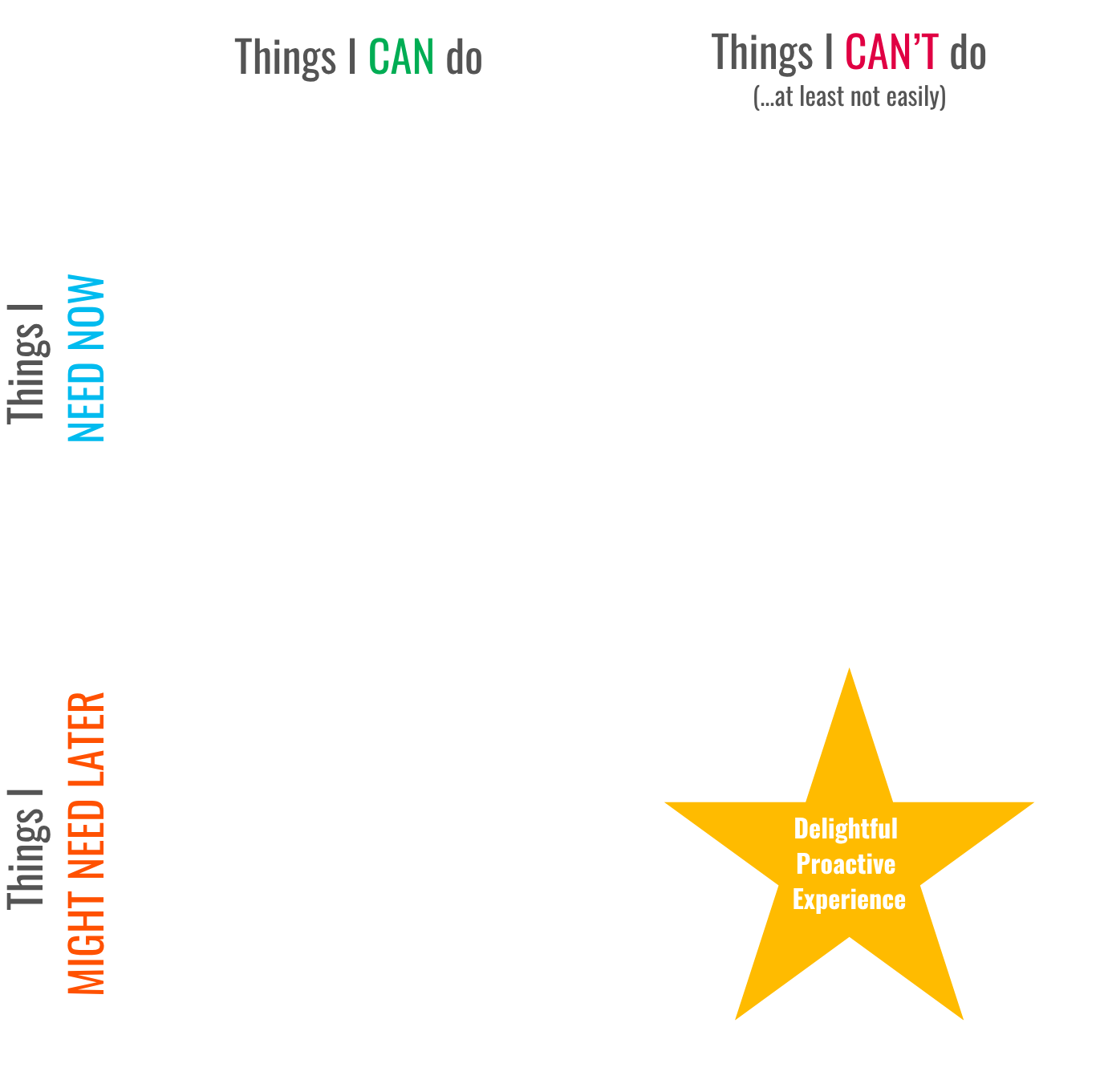

Framework 8: The Proactivity Quadrant (Helpful vs. Annoying)

There’s something magical about an agent that proactively intuits what you need and delivers it before you even ask. That is the dream. The other side of that coin is…Clippy.

The goal is to be proactive in a way that is helpful, not intrusive. A colleague and I were discussing this, and I found myself quickly pulling out a post-it note and sketching up this helpful quadrant-based approach to prioritizing proactive opportunities:

In the top row are tasks a user will simply do themselves when an active need arises. Here, proactivity is a moot point because the user is already in motion. They may still use AI to help them accomplish the task, but it’s more "reactive" than "proactive."

The bottom-left quadrant contains tasks where an agent could be proactive, but because a user can easily do them on their own, the bar for getting it perfectly right is incredibly high. The magic is diminished.

The real magic happens in the bottom-right: tasks that are hard for a user to complete on their own.

Take a real-world scenario: planning a team offsite. It’s a task that seems simple but quickly becomes a complex mess of logistics. You have to find a venue that's available, research activities that cater to different personalities, find restaurants that can seat a large group, and juggle it all within a budget. It's a coordination headache that often gets pushed to the last minute.

Imagine an agent sees "Team Offsite Planning" on your to-do list or calendar. It knows your team size and has access to their general availability. The next morning, it proactively delivers a link to a custom, interactive webpage titled "New York Offsite Proposal." This isn't a static document; it's a dynamic planning hub that includes:

Three potential venue options, complete with photos, pricing, and confirmed availability for your dates.

A proposed agenda with a mix of work sessions and suggested team-building activities (e.g., "Brewery Tour" vs. "Escape Room").

A list of recommended restaurants with links to menus and reservation options.

A real-time budget tracker at the top that updates as you select different options.

A "Share with Team for Feedback" button that lets your colleagues vote on their preferred activities.

That would feel like pure magic. It transforms a daunting, multi-step coordination problem that you’ve been dreading into a simple, delightful experience of making choices.
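For anyone who wants to triage a backlog of proactive ideas, the quadrant can be written down as a tiny classifier. This is only a sketch under my own assumptions: the two boolean fields are my reading of the quadrant's axes, and the example tasks other than the offsite are made up for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Priority(Enum):
    REACTIVE = "top row: user is already in motion, so assist reactively"
    HIGH_BAR = "bottom-left: proactive only if it is nearly perfect"
    SWEET_SPOT = "bottom-right: best candidate for proactive help"


@dataclass(frozen=True)
class Task:
    name: str
    user_will_start_it: bool  # the user acts on their own when the need arises
    hard_for_user: bool       # effortful to complete without help


def proactivity_priority(task: Task) -> Priority:
    """Map a task onto the quadrant described above."""
    if task.user_will_start_it:
        return Priority.REACTIVE
    if task.hard_for_user:
        return Priority.SWEET_SPOT
    return Priority.HIGH_BAR


tasks = [
    Task("Look up tonight's recipe", user_will_start_it=True, hard_for_user=False),
    Task("Set a weekly reminder", user_will_start_it=False, hard_for_user=False),
    Task("Plan team offsite", user_will_start_it=False, hard_for_user=True),
]
for task in tasks:
    print(f"{task.name}: {proactivity_priority(task).name}")
```

Run against a real list of ideas, the interesting output is the short list that lands in `SWEET_SPOT`; that is where proactive effort is most likely to feel like magic.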

Framework 9: Memory & Personalization (Implicit vs. Explicit)

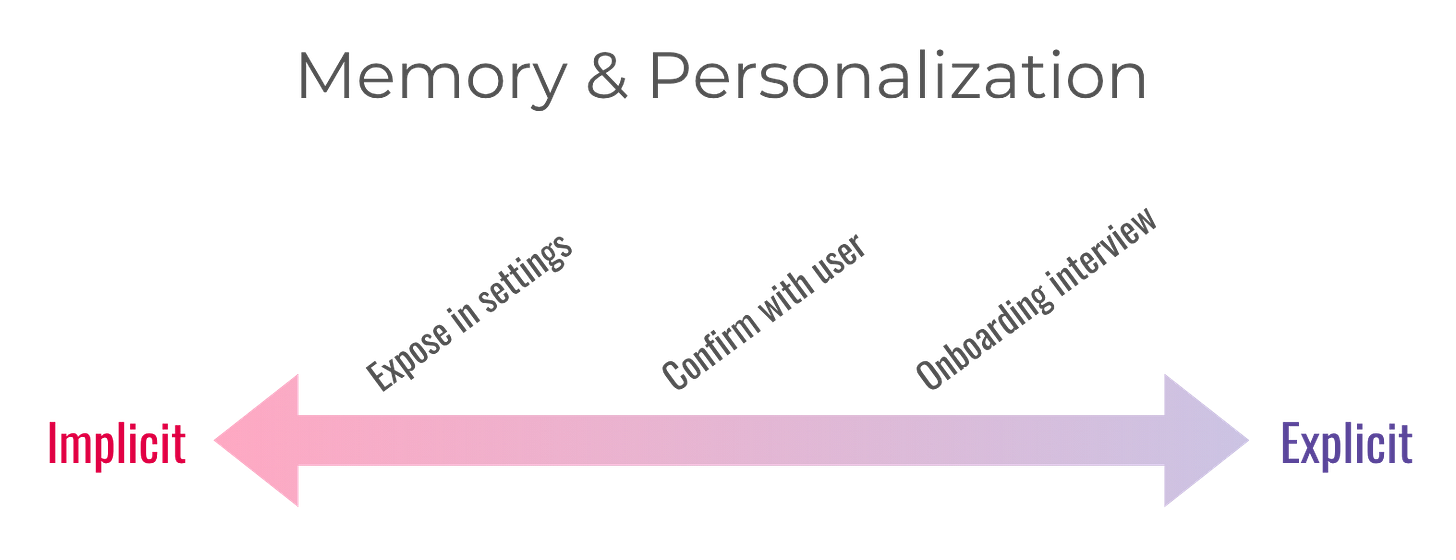

As a user engages with an agent, how should it remember things about them?

Implicit Memory: This is what the agent learns by observing your behavior over time. It should know I love salads because I'm constantly looking up recipes and building a cookbook. It should know I have three kids from my party-planning queries. This is powerful because it requires no extra work from the user; the agent just gets smarter. The risk? It can be wrong (maybe I was researching sushi for a friend, not for me) or incomplete (I also really love Korean food).

Explicit Memory: This is information the user directly provides, perhaps in a settings panel or through a fun onboarding dialogue ("What are your hobbies?"). This is more accurate but requires more user effort.

It’s important to balance user friction with accuracy, and the best experiences will likely mix both approaches. An agent might learn something implicitly, then ask for confirmation ("I've noticed you're interested in vegetarian recipes. Should I save that as a preference?"). It could also provide a space where a user can view and edit what the agent "knows" about them, prioritizing high-stakes facts like food allergies over simple food preferences.
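Here is one way the implicit-then-confirm pattern could look as a data structure. Treat it as a rough sketch: the `MemoryStore` class, its method names, and the rule that high-stakes facts sort to the top of the review list are all assumptions of mine, chosen to mirror the ideas above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Source(Enum):
    IMPLICIT = "implicit"  # inferred from behavior; may be wrong or incomplete
    EXPLICIT = "explicit"  # stated or confirmed by the user


@dataclass
class Memory:
    fact: str
    source: Source
    high_stakes: bool = False


@dataclass
class MemoryStore:
    memories: dict = field(default_factory=dict)

    def tell(self, fact: str, high_stakes: bool = False) -> None:
        """The user states something directly (explicit memory)."""
        self.memories[fact] = Memory(fact, Source.EXPLICIT, high_stakes)

    def observe(self, fact: str) -> Optional[str]:
        """Record an inference and return a confirmation prompt, if it is new."""
        if fact in self.memories:
            return None
        self.memories[fact] = Memory(fact, Source.IMPLICIT)
        return f"I've noticed {fact}. Should I save that as a preference?"

    def confirm(self, fact: str) -> None:
        self.memories[fact].source = Source.EXPLICIT

    def reject(self, fact: str) -> None:
        self.memories.pop(fact, None)

    def review(self) -> list:
        """What the user sees in a 'what I know about you' view."""
        # Stable sort: high-stakes facts first, insertion order otherwise.
        return sorted(self.memories.values(), key=lambda m: not m.high_stakes)


store = MemoryStore()
print(store.observe("you're interested in vegetarian recipes"))
store.confirm("you're interested in vegetarian recipes")
store.observe("you like sushi")
store.reject("you like sushi")  # "that was for a friend"
store.tell("you have a peanut allergy", high_stakes=True)
print([m.fact for m in store.review()])
```

The design choice worth noticing is that an inferred fact stays marked as implicit until the user confirms it, so the product can decide how much weight to give unconfirmed guesses.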

Framework 10: Recommendations & Recall (To Show or Not to Show)

When an agent uses personal context to provide a recommendation, should it say so? Years ago on the Assistant team, we debated this. If my husband and I ask for "the best restaurants nearby" and get different answers (a taco place for me, Italian for him), would that erode trust or build it?

There's a fascinating trade-off here. On one hand, being transparent about why a recommendation is being made can build significant trust and show the agent is paying attention. On the other, it can clutter the UI and feel robotic if not done well.

Instead of a single right answer, I see a spectrum of approaches, best illustrated by looking at a couple of products that handle this in different but effective ways: LinkedIn and Netflix.

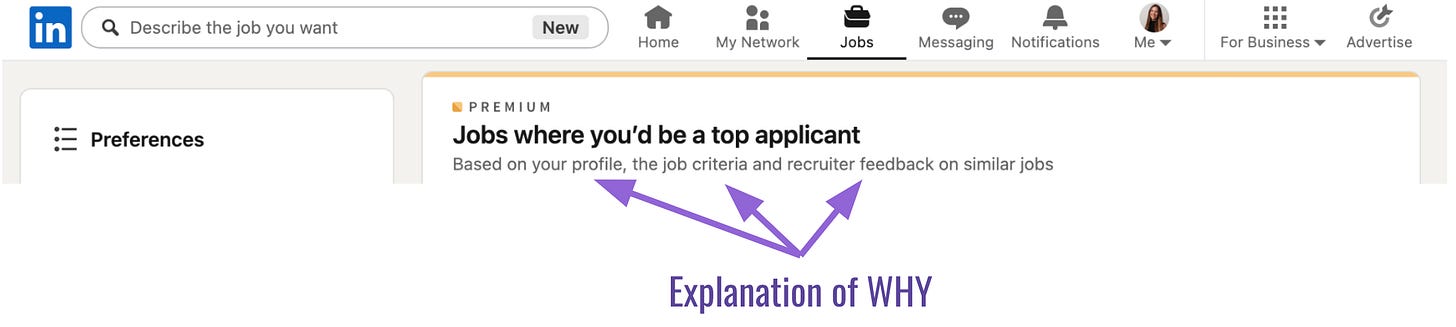

The LinkedIn Approach: Explicit Transparency

For high-stakes decisions, like job applications, transparency is key. LinkedIn does a great job of this by explicitly stating the reasoning behind its personalized recommendations.

By telling the user "Based on your profile, the job criteria and recruiter feedback...", LinkedIn turns a black box into a clear value proposition. This approach is effective because it directly answers the user's unstated question: "Why are you showing me this?" It makes the personalization feel earned and credible.

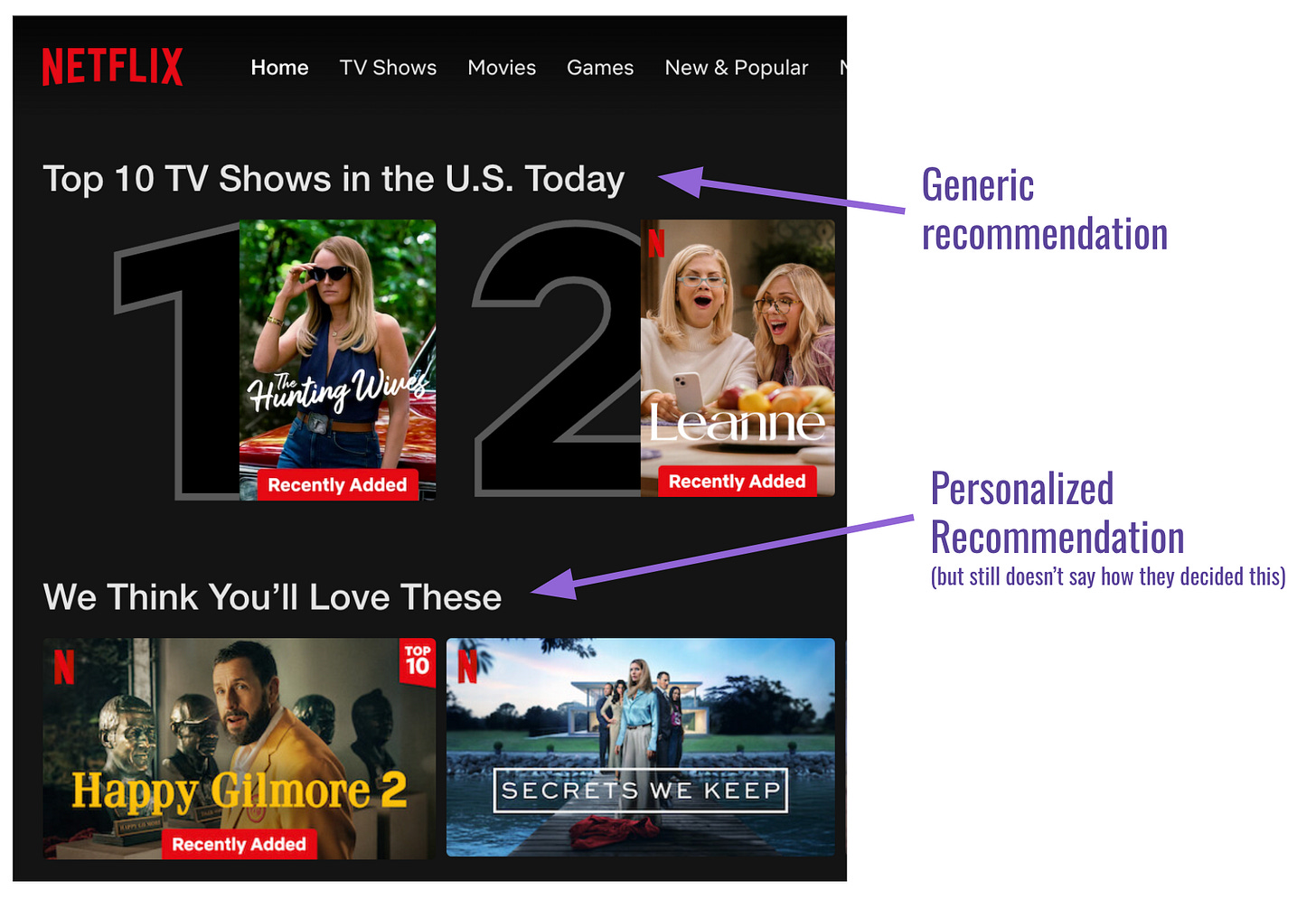

The Netflix Approach: Implicit Personalization with Explicit Framing

For lower-stakes, high-volume recommendations like what to watch next, spelling out the "why" for every suggestion would be overwhelming. Netflix cleverly solves this by using explicit framing to separate personalized content from generic content.

Rows like "Top 10 TV Shows in the U.S. Today" are clearly generic, based on popularity. But a row titled "We Think You'll Love These" is an explicit signal to the user: "This next set is just for you." It doesn't need to say, "Because you watched three sci-fi movies..." The framing itself implies a personalized context, creating a feeling of being understood without over-explaining.

The Conversational Agent Approach: Seamless Context

The most elegant approach, especially for conversational agents, might be to weave the context naturally into the response itself. Instead of a clunky label, imagine an agent replying: "You had pasta last night, so how about changing it up with a Niçoise salad tonight? Here’s that recipe you starred last month." That kind of response combines the clarity of LinkedIn's "why" with the seamless feel of Netflix's personalization, demonstrating intelligence without breaking the conversational flow. Even so, some may find this answer too verbose and prefer a straightforward response like "How about a Niçoise salad?"

Ultimately, the right choice depends on the context of your product and your users. Is the decision high-stakes? Be explicit like LinkedIn. Is it low-stakes and high-volume? Frame it like Netflix. Is it conversational? Weave it in naturally when it makes sense.
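The three questions above can be sketched as a small decision rule. This is a simplification with assumptions baked in: the order of the checks (stakes first, then conversational) is my own choice, and the `Style` names and `render` wording are hypothetical, not how LinkedIn or Netflix actually implement anything.

```python
from enum import Enum


class Style(Enum):
    EXPLICIT_REASON = "state the why (LinkedIn-style)"
    FRAMED_ROW = "signal personalization through framing (Netflix-style)"
    INLINE_CONTEXT = "weave the context into the reply"


def choose_style(high_stakes: bool, conversational: bool) -> Style:
    # Assumed precedence: stakes outrank surface type.
    if high_stakes:
        return Style.EXPLICIT_REASON
    if conversational:
        return Style.INLINE_CONTEXT
    return Style.FRAMED_ROW


def render(item: str, reason: str, style: Style) -> str:
    if style is Style.EXPLICIT_REASON:
        return f"{item} (recommended because {reason})"
    if style is Style.INLINE_CONTEXT:
        return f"Since {reason}, how about {item}?"
    # Framing only: the reason is intentionally left unsaid.
    return f"We Think You'll Love These: {item}"


style = choose_style(high_stakes=False, conversational=True)
print(render("a Niçoise salad", "you had pasta last night", style))
```

In practice the inputs are rarely clean booleans, but writing the rule down forces a team to agree on which consideration wins when they conflict.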

Putting It All Together: The Agent Design Mindset

These additional frameworks, like the ones before them, aren't an exhaustive checklist. Instead, they serve as mental models to help dissect the multifaceted challenges of agent design, from high-level strategy (thinking big with the inverted triangle) to the nuanced details of a single interaction (like proactive recommendations or contextual identity). They help ensure I’m thinking holistically about how to build products that feel not just capable, but truly intelligent and user-centric.

Ultimately, building the next generation of truly helpful agents requires more than just technical execution. It demands a forward-looking mindset that starts with an ambitious vision, a deep empathy for the fluid nature of user identity, and a thoughtful approach to proactivity and personalization. It's about designing systems that can adapt, remember, and collaborate with users in ways that genuinely make their lives easier and more creative.

These are some of the mental models I'm using to navigate this space. What are your go-to frameworks or principles for building in this new era? I'd love to hear your thoughts.